Spring Specials Have Arrived!

Take advantage of our special Bundles that will help you build your web projects in no time. Pick your favorite one.

Featured Products

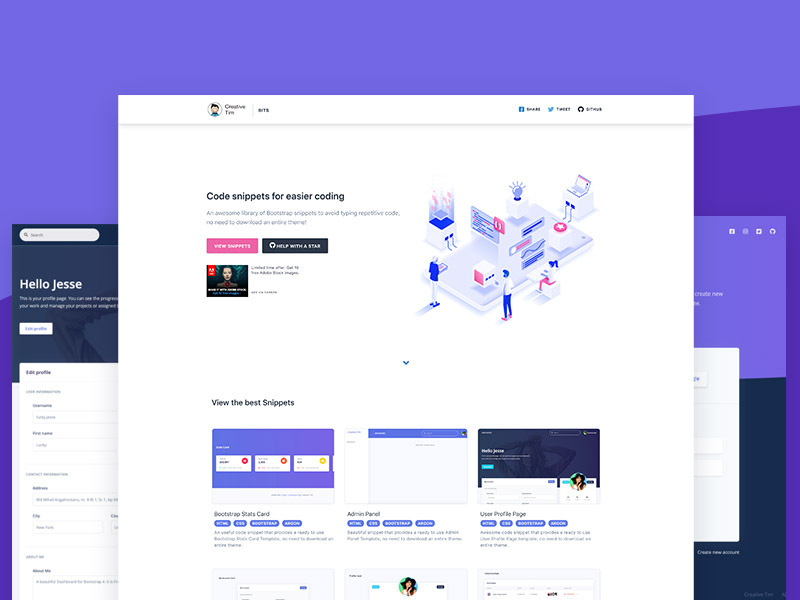

Browse this month’s best-selling themes

The best web themes and templates have arrived.

See all products

Fundamentals of Creating a Great UI/UX

by

After 8 years of crafting next-generation web design tools, UI Kits, Admin Dashboards and Mobile App Templates, we decided to write this UI/UX guidebook based on our experience.

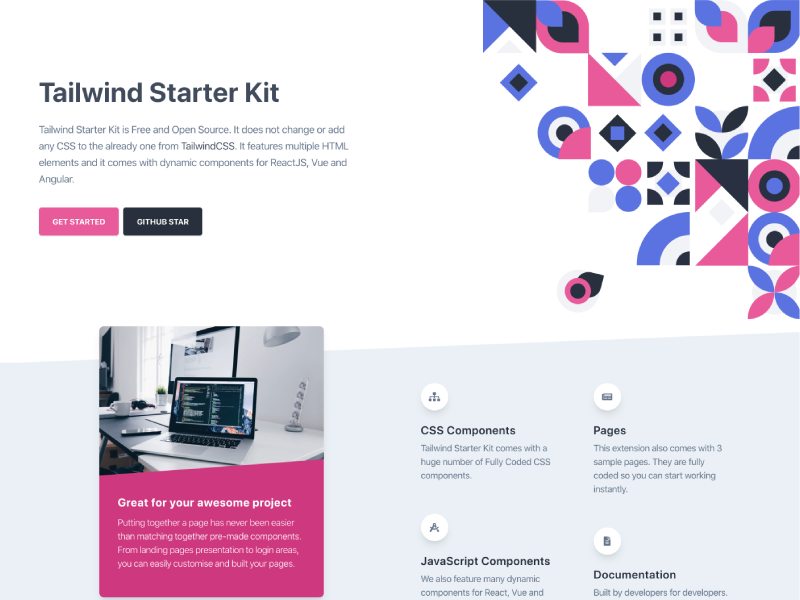

View details

Most Popular Freebies

Browse this month’s best free themes

The best web themes and templates have arrived.

See all products

Latest Products
